Come along with me on a journey as we delve into the swirling, echoing madness of identity attacks. Today I present a case study on how different implementations of OAuth 2.0, the core authorization framework behind most identity providers, can have radically different attack surfaces.

The subjects for today’s case study are two scrappy up-and-coming tech shops straight outta Silicon Valley: Microsoft and Google. Perhaps you’ve heard of them?

Let’s look at how one of my favorite identity attacks, Device Code Phishing, stacks up between these two identity providers. By the end of this blog, you, dear reader, will appreciate how the OAuth 2.0 implementation compares between these two identity providers and how that directly facilitates or negates the blast radius of this extremely over-powered attack.

Ready to get nerdy with it? Let’s get nerdy with it.

Before we understand Device Code Phishing, we need to understand the feature that allows this attack to exist in the first place. Let’s begin with an implementation-independent breakdown of the feature in question and how it's exploited. Time to meet the device code flow, aka the device authorization grant!

I read so you don’t have to. Though I’d recommend it if you’re looking for a sleep aid.

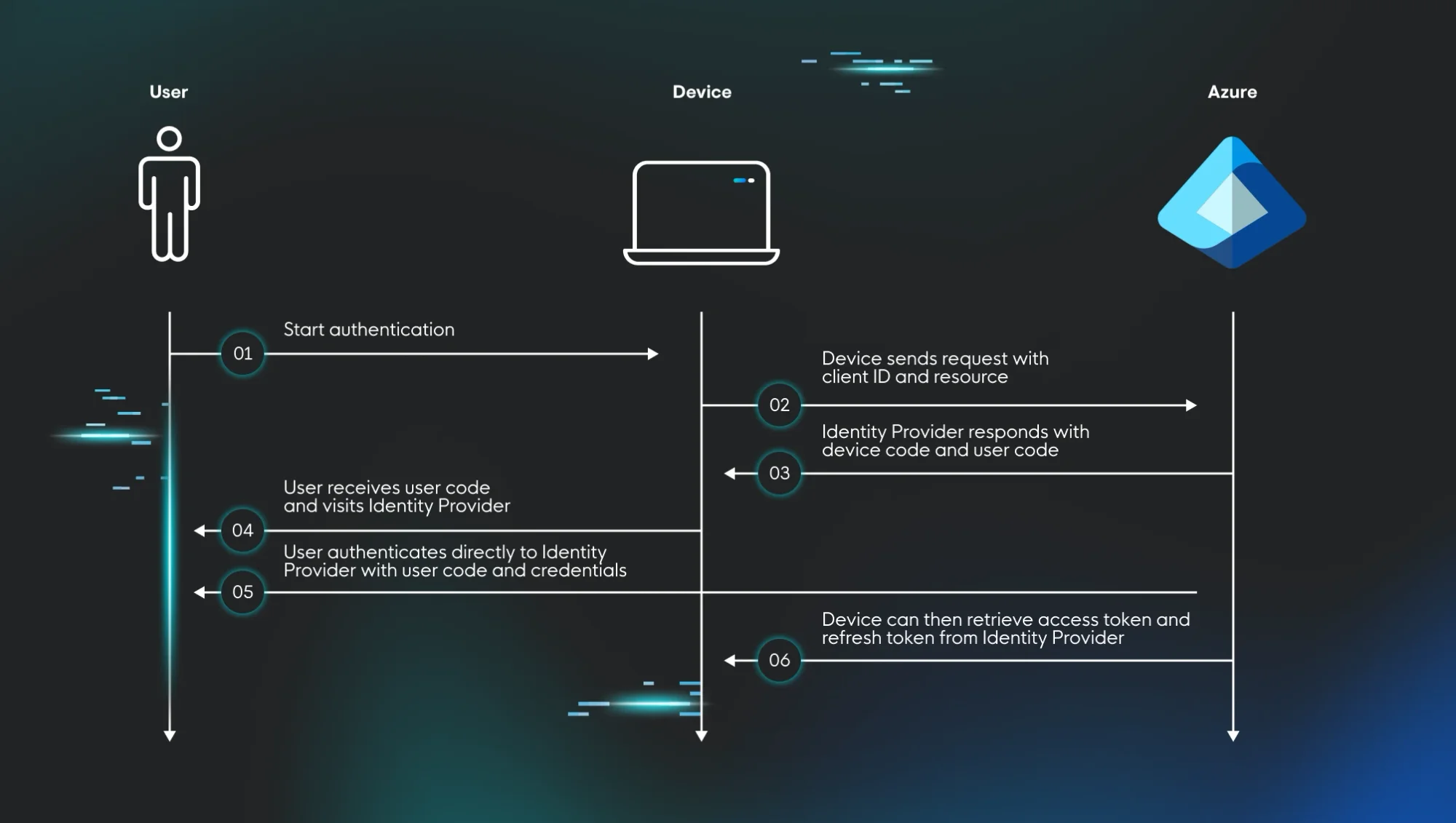

The device authorization grant is used in scenarios where one wishes to authenticate an internet-connected device that lacks a traditional input mechanism like a keyboard, web browser, or the like. The RFC calls these input-constrained devices. Let’s imagine your average IoT printer, smart TV, WiFi-enabled toaster with a touch screen, or any other kind of device that's internet-connected but lacks a full-featured user interface.

If you want to authenticate your WiFi-enabled toaster into your cloud tenant (and, really, who wouldn’t want the thrill of doing that?), you have an issue on your hands. You can’t browse to the login page of your tenant from your toaster and input your username and password. But let’s imagine in this scenario that your toaster has a touch screen where you could input a six-digit code. So how could you authenticate the toaster?

The device code flow allows you to:

This effectively solves the problem of authenticating input-constrained devices. Huzzah! Now you can join your toaster to your tenant.
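To make the moving parts concrete, here is a toy, in-memory model of the flow. Everything in it (the `ToyAuthorizationServer` class, its method names, the token format) is hypothetical and exists only for illustration; it is not how any real identity provider is implemented. The point it sketches is that the device holds a long secret device code, the human only ever sees the short user code, and the tokens are released to whoever polls with the device code.

```python
import secrets


class ToyAuthorizationServer:
    """In-memory stand-in for an identity provider (hypothetical, not a real API)."""

    def __init__(self):
        self._pending = {}    # device_code -> user_code awaiting approval
        self._approved = {}   # device_code -> username who approved it

    def start_device_flow(self):
        """Step 1: the device asks for a (device_code, user_code) pair."""
        device_code = secrets.token_urlsafe(32)           # long secret kept by the device
        user_code = f"{secrets.randbelow(10**6):06d}"     # six-digit code shown to the human
        self._pending[device_code] = user_code
        return device_code, user_code

    def approve(self, user_code, username):
        """Step 2: the human signs in on another machine and enters the user code."""
        for device_code, code in list(self._pending.items()):
            if code == user_code:
                self._approved[device_code] = username
                del self._pending[device_code]
                return True
        return False

    def poll(self, device_code):
        """Step 3: the device polls with its device code until approval lands."""
        if device_code in self._approved:
            return {"access_token": f"token-for-{self._approved[device_code]}"}
        if device_code in self._pending:
            return {"error": "authorization_pending"}
        return {"error": "invalid_grant"}


server = ToyAuthorizationServer()
device_code, user_code = server.start_device_flow()
before = server.poll(device_code)            # nobody has approved yet
server.approve(user_code, "toaster-owner")   # human enters the code in a browser
after = server.poll(device_code)             # the device now receives a token
print(before, after)
```

Note that `approve` never learns who requested the codes in the first place. That gap is what the rest of this post is about.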

If this sounds extremely niche, it’s because it is. This feature of OAuth has a narrow application and is only used in a handful of scenarios by certain types of users. Put simply, your average user likely will not need to know about the device code flow.

If hackers are good at anything, it’s finding niche features like the device code flow and pivoting this one-off authentication method into a vector of initial access. So let’s talk about phishing with device codes!

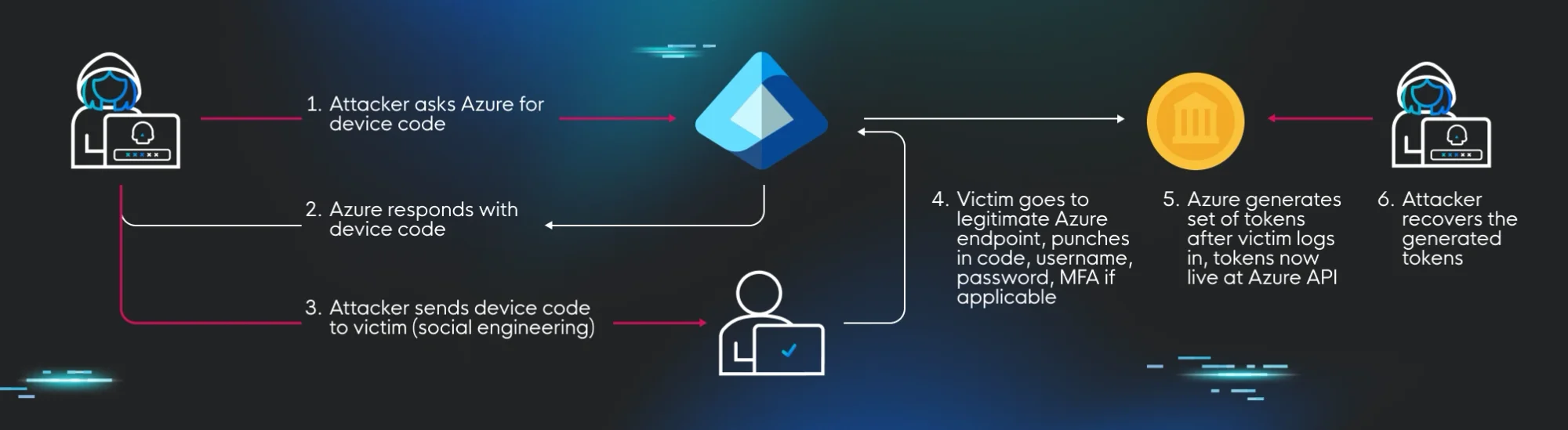

Phishing by exploiting the device code flow is nothing new. Some of the great identity researchers of our time have already written on the subject, including , , and (@inversecos). By applying some social engineering finesse, the device code flow can be co-opted and used maliciously to generate an access token to some resource that can then be recovered by the attacker.

Here’s how it goes down:

Let’s look at a concrete example where the Azure device code flow is used to perform this attack.

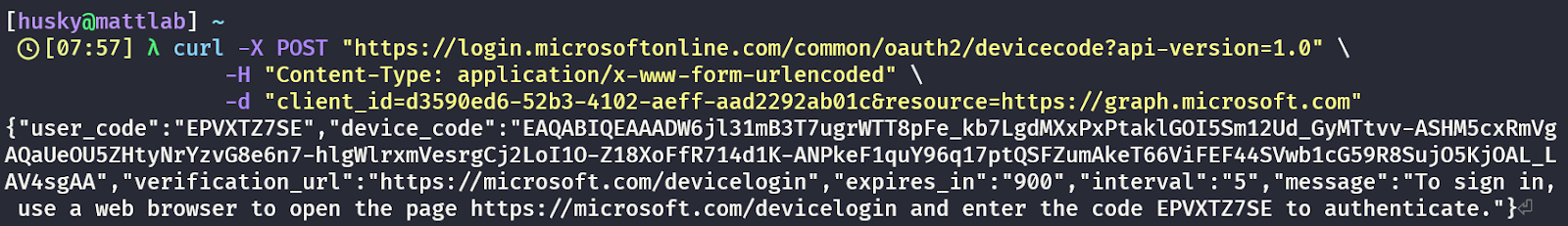

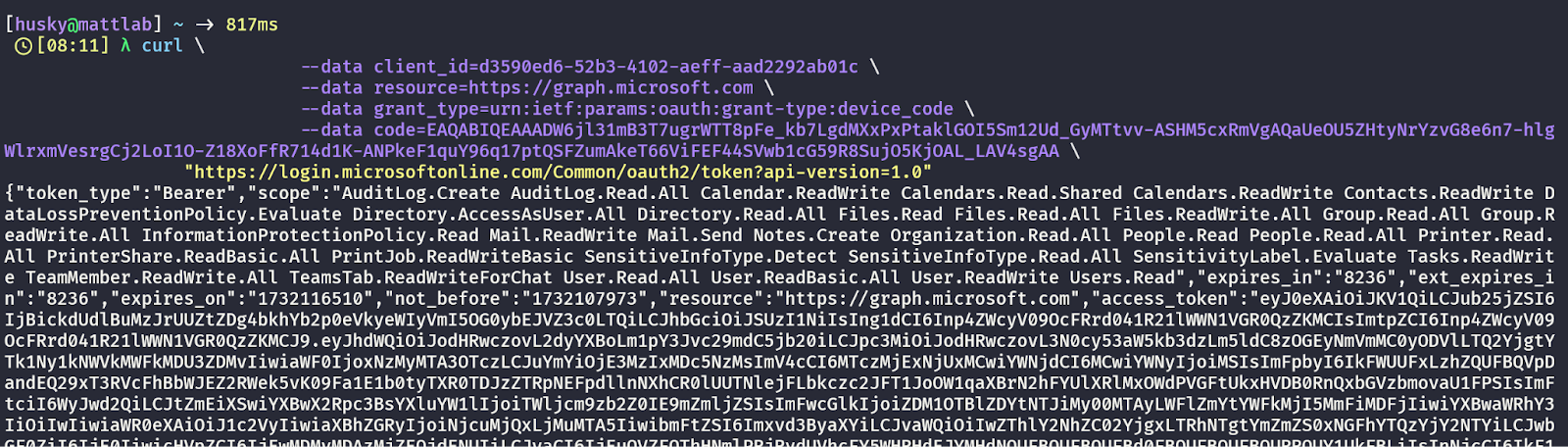

First, the attacker requests the device code. This is as simple as cURLing the Azure device code flow API. Remember: as an attacker, I can just do this. I don’t need to authenticate or prove who I am. As long as I structure the request correctly, the API will hand over a device code to start the process. The cURL request would look something like this…
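For readers who prefer Python to cURL, here is a hedged sketch of the same kind of request. It only builds the request and prints it; nothing is sent. The URL follows the v2.0 device authorization endpoint pattern in Microsoft's public documentation, which may differ from the endpoint used in this walkthrough, and the tenant, client ID, and scope are placeholders rather than the values from this post.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Placeholders for illustration only; none of these values come from this post.
TENANT = "common"
CLIENT_ID = "00000000-0000-0000-0000-000000000000"
SCOPE = "https://graph.microsoft.com/.default offline_access"


def build_device_code_request(tenant, client_id, scope):
    """Build (but do not send) a POST to the v2.0 device authorization endpoint."""
    url = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/devicecode"
    body = urlencode({"client_id": client_id, "scope": scope}).encode("ascii")
    return Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


request = build_device_code_request(TENANT, CLIENT_ID, SCOPE)
print(request.get_method(), request.full_url)
print(request.data.decode("ascii"))
```

Notice what is absent: no credentials, no secret, no proof of identity. A client ID and a scope are all the request carries.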

…where:

If the cURL request is correctly formatted, the API responds with a JSON blob containing the device code, the user code, and some helpful instructions about how to use them:
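As a rough illustration of what that blob carries, the sketch below parses a device authorization response using the field names defined in RFC 8628. The sample JSON is made up (it is not a real Microsoft response), and the `DeviceAuthorization` class is a hypothetical convenience wrapper.

```python
import json
from dataclasses import dataclass


@dataclass
class DeviceAuthorization:
    device_code: str       # secret the requesting party keeps and polls with
    user_code: str         # short code the human types into the login page
    verification_uri: str  # page where the human enters the user code
    expires_in: int        # lifetime of both codes, in seconds
    interval: int          # minimum seconds to wait between polls


def parse_device_authorization(raw):
    """Parse a device authorization response using RFC 8628 field names."""
    data = json.loads(raw)
    return DeviceAuthorization(
        device_code=data["device_code"],
        user_code=data["user_code"],
        verification_uri=data["verification_uri"],
        expires_in=int(data["expires_in"]),
        interval=int(data.get("interval", 5)),  # RFC 8628 default when omitted
    )


# Made-up sample values, not a real response.
sample = """{
  "device_code": "EXAMPLE-DEVICE-CODE",
  "user_code": "ABCD1234",
  "verification_uri": "https://login.example.com/device",
  "expires_in": 900
}"""

auth = parse_device_authorization(sample)
print(auth.user_code, auth.verification_uri, auth.interval)
```

Only `user_code` and `verification_uri` ever need to reach the human. The `device_code` stays with whoever made the request.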

At this point, in a legitimate device code authentication scenario, the user would move from this input-constrained device over to a computer with a web browser, browse to , and sign in using their web browser. But in the attack scenario, the attacker crafts a convincing phish that instructs the user to go to the same device code login page, sign in, and await further instructions.

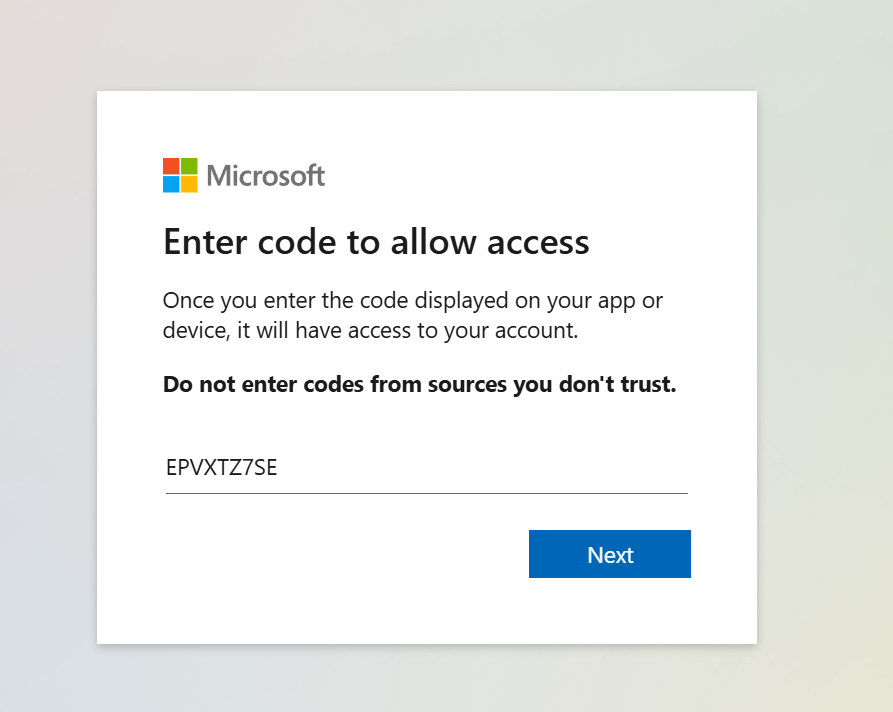

Let’s assume that the attacker has sent this phish with a convincing pretext, so the victim browses to and enters the provided code, duly ignoring the warning about inputting codes from untrusted sources…

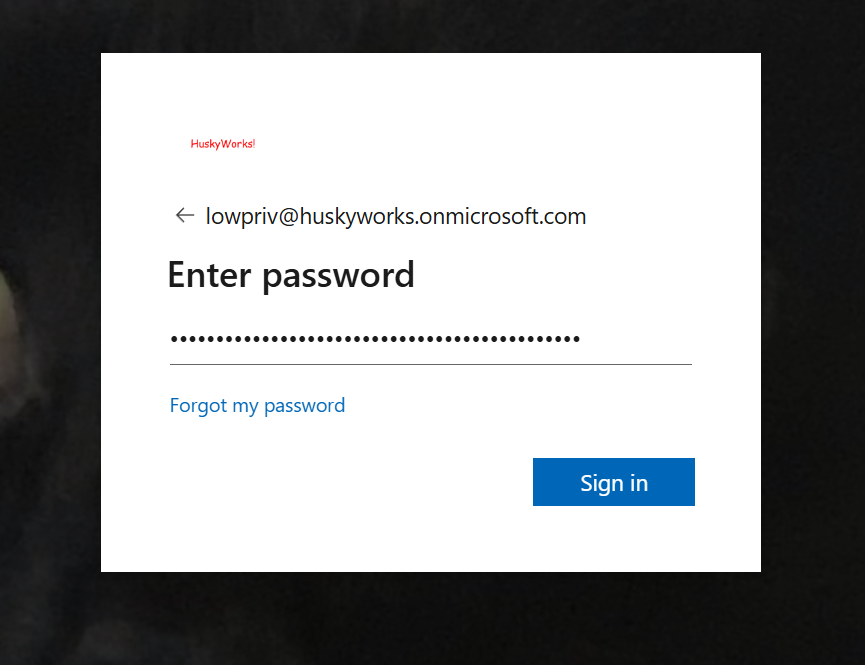

The page then prompts the victim for a username and password…

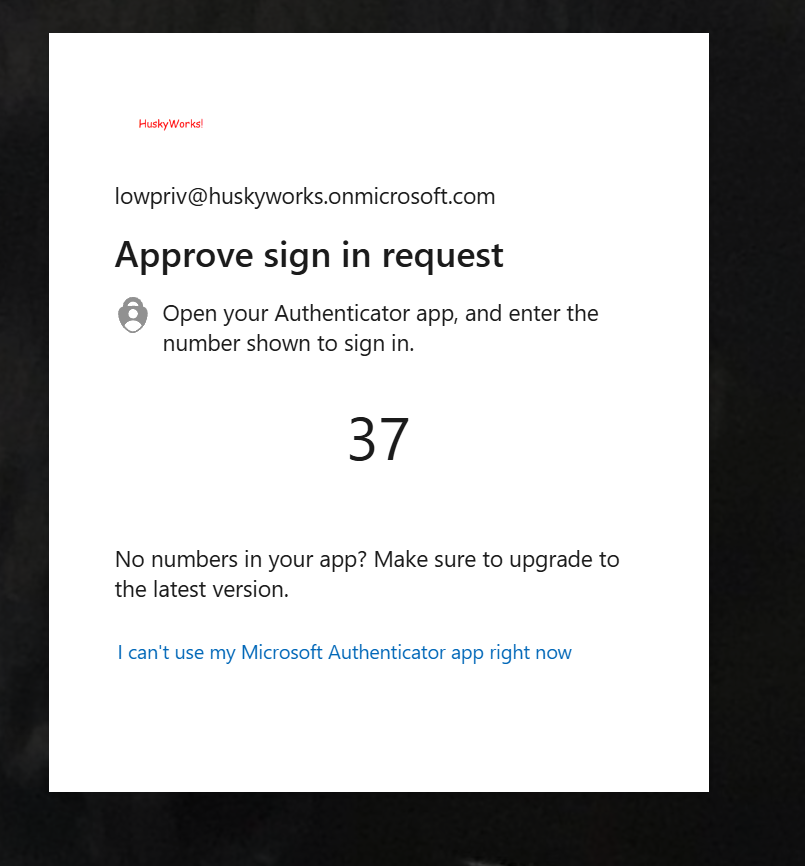

… and moves on to the MFA check, which the victim completes…

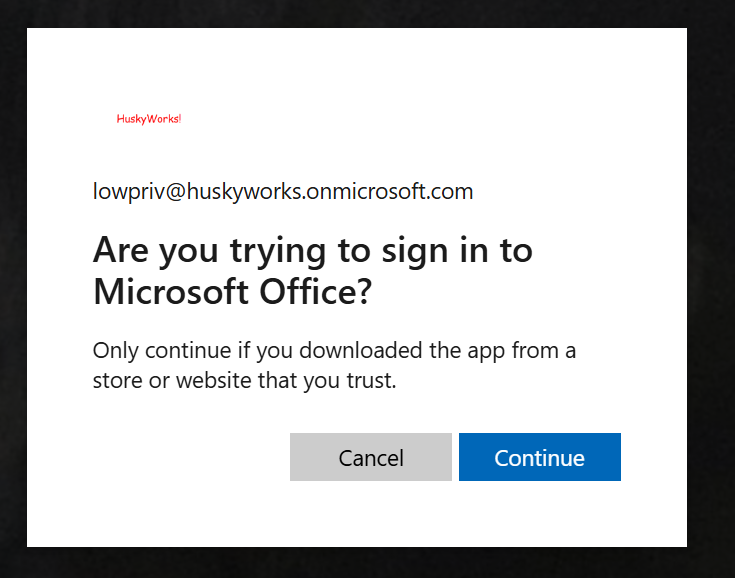

The page then renders one final question asking if the victim is trying to sign in to Microsoft Office, which the user assumes is routine and clicks continue…

And finally, the victim sees this (Figure 9):

Which, of course, is extremely confusing if you’re the one who fell victim to this attack.

Meanwhile, elsewhere, the attacker constructs another cURL command to poll the Azure authentication API:

…where:

Once the user has fallen victim to the phish, their authentication generates a set of tokens that now live at the OAuth token API endpoint and can be retrieved by providing the correct device code. The attacker, of course, knows the device code because it was generated by the initial cURL request to the device code login API. And while that code is useless by itself, once the victim has been tricked into authenticating, the resulting tokens now belong to anyone who knows which device code was used in the original request.
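The polling side can be sketched in a few lines of Python. This is a minimal, provider-agnostic loop based on the error codes in RFC 8628, not the exact command used here. The `post` argument is a hypothetical callable that submits form fields to a token endpoint and returns the decoded JSON; in the demo it is replaced by a canned sequence of answers so nothing touches the network.

```python
import time

GRANT_TYPE = "urn:ietf:params:oauth:grant-type:device_code"


def poll_for_tokens(post, client_id, device_code, interval=5, expires_in=900,
                    sleep=time.sleep):
    """Poll a token endpoint until the user code is approved or the flow fails.

    `post` is a hypothetical callable that sends the form fields to the token
    endpoint and returns the decoded JSON response as a dict.
    """
    waited = 0
    while waited < expires_in:
        response = post({
            "grant_type": GRANT_TYPE,
            "client_id": client_id,
            "device_code": device_code,
        })
        error = response.get("error")
        if error is None:
            return response            # success: the token response
        if error == "slow_down":
            interval += 5              # RFC 8628: back off by five seconds
        elif error != "authorization_pending":
            raise RuntimeError(f"device flow failed: {error}")
        sleep(interval)
        waited += interval
    raise TimeoutError("device code expired before it was approved")


# Simulated endpoint: one pending answer, one slow_down, then success.
answers = iter([
    {"error": "authorization_pending"},
    {"error": "slow_down"},
    {"access_token": "example-access", "refresh_token": "example-refresh"},
])
tokens = poll_for_tokens(lambda form: next(answers), "example-client",
                         "example-device-code", sleep=lambda seconds: None)
print(tokens)
```

The only secret in the loop is the device code. Nothing ties the poller to the person who signed in.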

A successful attack results in the attacker gaining an access token and a refresh token which are effective for the phished victim. The tokens are effective for a requested resource (in this case, the Graph API) and scoped to a set of OAuth permissions that allow the attacker to access resources as if they were the phished victim. Attack complete, initial access gained, cybercriminal happy!

There are a few things that make this attack insidious.

First, this attack uses the legitimate authentication flow instead of exploiting some vulnerability or bug. The entire phishing context stays on legitimate Microsoft infrastructure, using legitimate Microsoft APIs, to perform the legitimate (if hijacked) device code authentication flow. There’s no true exploitation occurring during this attack outside of tricking someone into using the device code flow when they shouldn’t.

Second, due to the previous point about using legitimate authentication functionality, the attacker gets to use legitimate URLs during the phishing portion. A user who is up on their Security Awareness Training might know to be rightfully suspicious of links, but may let their guard down when they see as the URL. I’m not defanging this URL in the text of this blog for a reason: it’s 100% totally legitimate, benign, and safe to visit that site. Through the entire example documented above, I never once had to send the victim to some sketchy site and have them punch in their credentials. So imagine how powerful it is when you, as the attacker, get to use a legitimate Microsoft URL for your phishing attack.

Finally (and most importantly), the attacker controls the specific type of resource and token that's generated due to this attack. In the example above, the attacker creates a device code that is scoped to the Graph API and specifies a client ID. Remember how I said to remember the client ID because it would be important later? Well…

...the client ID in the above example isn't some random string of characters. In fact, Microsoft’s OAuth implementation includes an undocumented set of client IDs that are freely available for anyone to use during authentication flows. This is called the Family of Client IDs and was discovered recently by Secureworks researchers. This subject is quite complicated, but to summarize it briefly: the combination of a client ID and the requested resource generates a token that can be scoped to different things.

For example, the client ID used in the above example corresponds to the Microsoft Office client. When the token is generated for the Graph API, the resulting token can be used to call the Graph API and read the user’s emails, look at their calendar, read their files, and many other things as specified in the scope of the token:
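If you want to check what a token is scoped to, one hedged approach is to decode its payload. The sketch below assumes the access token is a JWT and that, as in Microsoft-issued tokens, the audience lives in the `aud` claim and delegated permissions in a space-separated `scp` claim. The token is fabricated and unsigned, the claim values are made up, and no signature is verified, so treat this as inspection only.

```python
import base64
import json


def decode_jwt_claims(token):
    """Return the (unverified) claims from a JWT's payload segment."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)   # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


def b64url(obj):
    """Helper to fabricate a token segment for the demo below."""
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


# Fabricated, unsigned token with made-up claim values.
fake_token = ".".join([
    b64url({"alg": "none", "typ": "JWT"}),
    b64url({"aud": "https://api.example.com", "scp": "Mail.Read Files.Read"}),
    "",
])

claims = decode_jwt_claims(fake_token)
print(claims["aud"], claims["scp"].split())
```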

While the Family of Client IDs is fascinating, it’s largely outside the scope of this blog post. But the two key takeaways are:

To briefly illustrate the power of letting the attacker control the resource and client ID during authentication, look no further than in which he demonstrates how an attacker can leverage device code phishing to generate a Primary Refresh Token and steal it for initial access. Notably, this is possible because the attacker can set the resource of the device code request as https://enrollment.manage.microsoft.com/ and the client ID to the Microsoft Authentication Broker (29d9ed98-a469-4536-ade2-f981bc1d605e). The token generated from successfully phishing a victim with these parameters is the most powerful kind of token in the Azure OAuth token schema. This token can be used, among other things, to sign into web resources, join rogue devices to a tenant, create multi-factor authentication enrollments, and much, much more. It’s the most powerful token by a country mile and it’s only a small device code phish away from attacker control!

(As an aside, if you’re interested in identity research and red teaming, read every single blog published by Dirk-jan. You will not be disappointed.)

We’ve established how device code phishing is carried out in Azure and that it’s extremely powerful. The attacker gets control over the specific kind of resource and scope of the resulting tokens. It’s an attack that lives entirely within the Microsoft ecosystem. At worst, it can be used to steal the most powerful kind of token that exists.

But recall that the device code authentication flow isn't an Azure-specific mechanism. Rather, Azure implements device code flow as a method of authentication. The specification itself comes from RFC 8628, the OAuth 2.0 Device Authorization Grant. Azure is far from the only identity provider implementing device code flow. So I couldn’t help but wonder…

Does this mean that no matter which identity provider you use, device code phishing is equally dangerous? Do it just be like that?

Spoiler alert! The answer is, surprisingly, NO. And we don’t have to look any further than good ol’ uncle Google to see an example where implementation details have a massive effect on how much damage can be done by this technique.

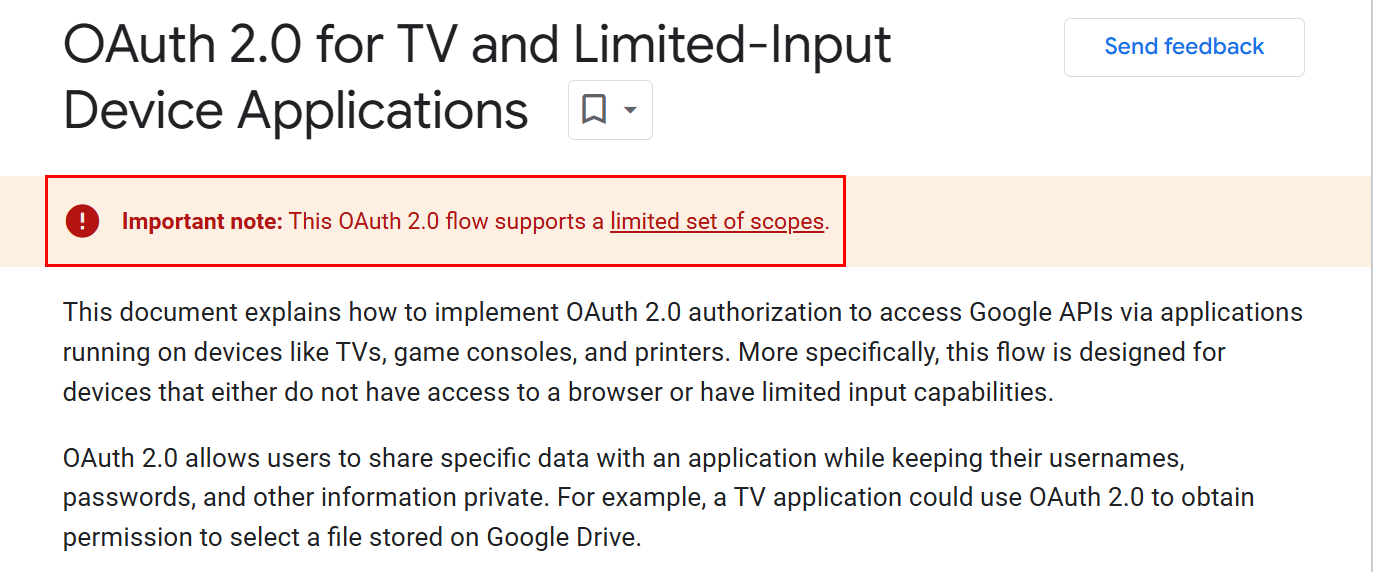

Let’s assume that we’re on a red team engagement against a client that uses Google as their primary identity provider. Let’s try to recreate the device code phishing attack using the Google Workspace/Google Cloud APIs. As always, a great place to start is the . And right away, it becomes clear that we’re going to be fighting an uphill battle:

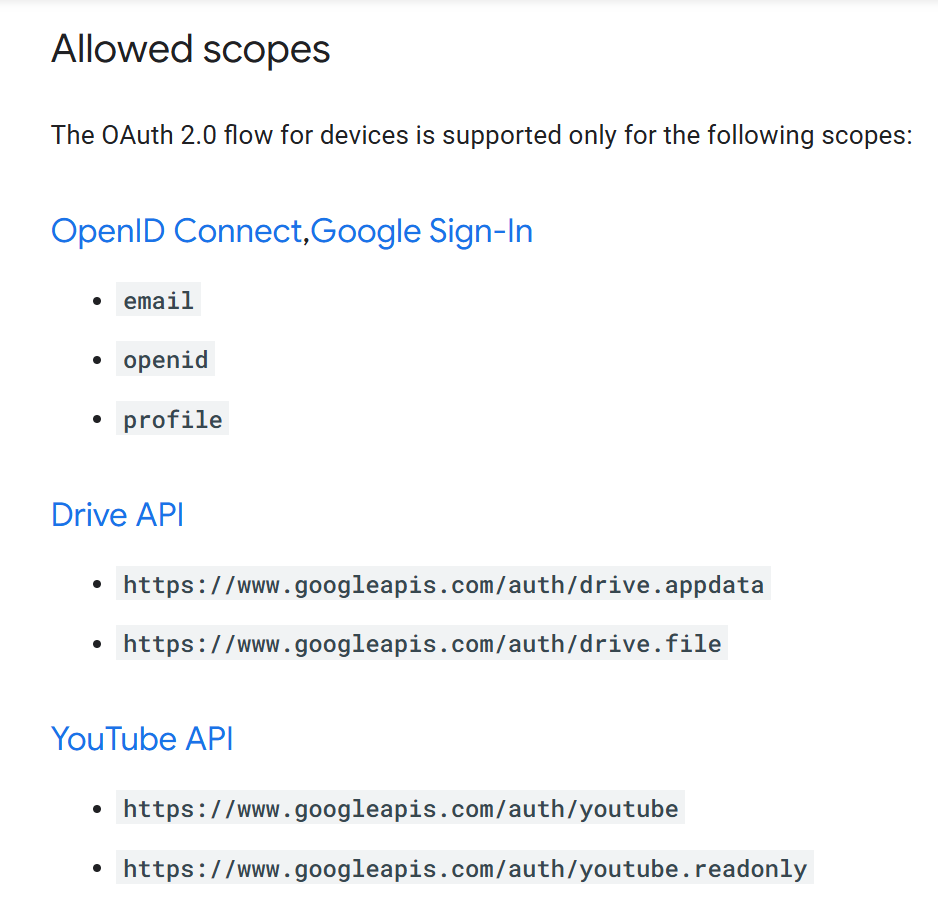

The documentation clearly states that not all scopes are supported by the device code flow. But hackers never give up hope, so let’s examine which scopes are supported:

If you’re familiar with OAuth 2.0 scopes and their general abusability by attackers, you may have just fallen out of your chair. If you’re not familiar, I can summarize it this way: the first three scopes are universal across all implementations of OAuth 2.0 and OpenID Connect (OIDC) and allow for limited, if any, exploitation. The remaining supported scopes are extremely narrow when we’re looking for exploitation primitives.
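To put the restriction in code form, here is a small sketch of an allow-list check. The scope list is my assumption of what Google's documentation permits for this flow (three OIDC scopes, two Drive scopes, two YouTube scopes); verify it against the current documentation before relying on it. The `split_scopes` helper is hypothetical, and the real enforcement happens server-side at Google, not in client code like this.

```python
# Assumed allow-list: three OIDC scopes plus two Drive and two YouTube scopes.
# Verify against Google's current documentation before relying on it.
DEVICE_FLOW_SCOPES = frozenset({
    "openid", "email", "profile",
    "https://www.googleapis.com/auth/drive.appdata",
    "https://www.googleapis.com/auth/drive.file",
    "https://www.googleapis.com/auth/youtube",
    "https://www.googleapis.com/auth/youtube.readonly",
})


def split_scopes(requested):
    """Split a space-delimited scope string into (allowed, rejected) lists."""
    scopes = requested.split()
    allowed = [s for s in scopes if s in DEVICE_FLOW_SCOPES]
    rejected = [s for s in scopes if s not in DEVICE_FLOW_SCOPES]
    return allowed, rejected


allowed, rejected = split_scopes(
    "openid https://www.googleapis.com/auth/youtube.readonly "
    "https://www.googleapis.com/auth/gmail.readonly"
)
print("allowed:", allowed)
print("rejected:", rejected)
```

The mail scope, which is the kind of thing an attacker would actually want, lands in the rejected pile.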

Like, be so for real with me right now: in Azure, you can set up a device code phishing attack where the resulting tokens allow you to basically become the victim user, join a rogue device to the victim tenant, sign in to their email and other resources, and other incredibly powerful types of access. And in Google, we can use the GDrive file permission scope to *checks notes* “see, edit, create, and delete only the specific Google Drive files you use with this app.”

Before we’ve even started the attack, Google’s implementation of the device code flow has effectively neutered the attack potential. If any red teamers reading this want to let me know how to pivot the youtube.readonly scope into an account takeover, I’m all ears (not joking, hit me up: matt.kiely[at]huntresslabs.com and I’d love to talk). But I’m not holding my breath.

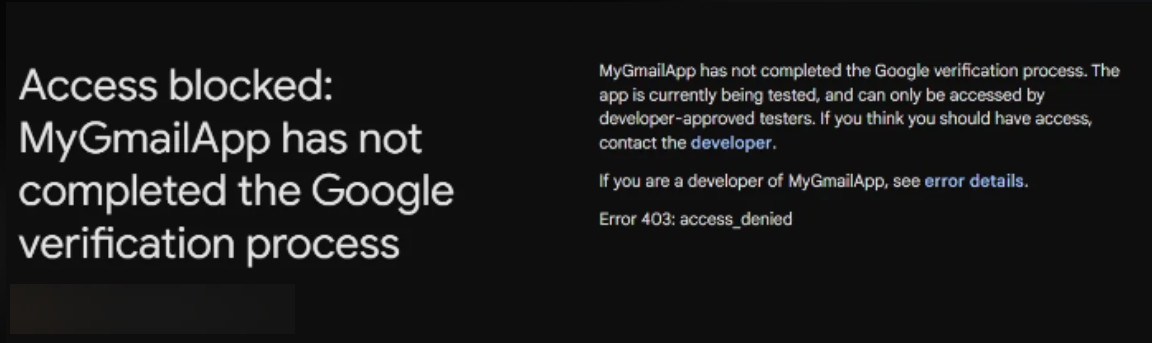

But hope springs eternal, so let’s attempt to carry out the attack anyway. As far as I can tell, there are no public client IDs for Google like there are for Azure. So if we want to execute the attack, we need a client ID. The recommended way to obtain a client ID is to . So if you were hoping for some semblance of anonymity while carrying out the attack, you can throw that out the window.

Even if you go through the trouble of building an OAuth app within Google, there are still other checks and safeguards in place. For example, if your application requires too many powerful permissions, it must be published and verified before anyone can use it:

But that’s not going to be an issue for us, remember? We’re limited to four scopes of permissions: two for GDrive and two for YouTube. We’re not exactly working with powerful exploitation primitives here.

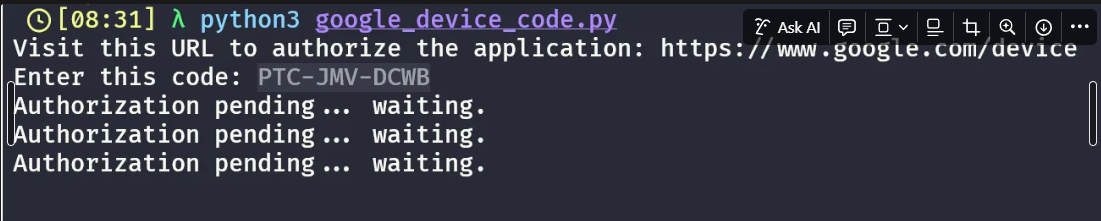

The technical steps to request a device code from Google are largely the same as with Azure. For variety’s sake, we’ll use Python to do the initial request and poll the endpoint until the authentication is complete:
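A minimal sketch of the two requests involved, assuming the endpoints and parameter names from Google's public documentation for limited-input devices. The client ID and secret are placeholders for an OAuth client you would have to register yourself, and the sketch only builds and prints the requests rather than sending them.

```python
from urllib.parse import urlencode
from urllib.request import Request

DEVICE_CODE_URL = "https://oauth2.googleapis.com/device/code"
TOKEN_URL = "https://oauth2.googleapis.com/token"
GRANT_TYPE = "urn:ietf:params:oauth:grant-type:device_code"

# Placeholders: a real run needs the ID and secret of your own OAuth client.
CLIENT_ID = "example-client-id.apps.googleusercontent.com"
CLIENT_SECRET = "example-client-secret"


def form_post(url, fields):
    """Build (but do not send) a form-encoded POST request."""
    return Request(url, data=urlencode(fields).encode("ascii"), method="POST")


def device_code_request(scope):
    """Request that starts the flow and returns the device and user codes."""
    return form_post(DEVICE_CODE_URL, {"client_id": CLIENT_ID, "scope": scope})


def token_request(device_code):
    """Request to repeat, at the advertised interval, until the user approves."""
    return form_post(TOKEN_URL, {
        "client_id": CLIENT_ID,
        "client_secret": CLIENT_SECRET,
        "device_code": device_code,
        "grant_type": GRANT_TYPE,
    })


start = device_code_request("https://www.googleapis.com/auth/youtube.readonly")
poll = token_request("example-device-code")
print(start.full_url, start.data.decode("ascii"))
print(poll.full_url, poll.data.decode("ascii"))
```

Passing either request to `urllib.request.urlopen` would send it. Unlike the Azure request, the token exchange here also carries a client secret tied to a registered application.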

When we run the script, it gives us a device code. We ostensibly phish the user and instruct them to authenticate just like with the Azure device code phish attack:

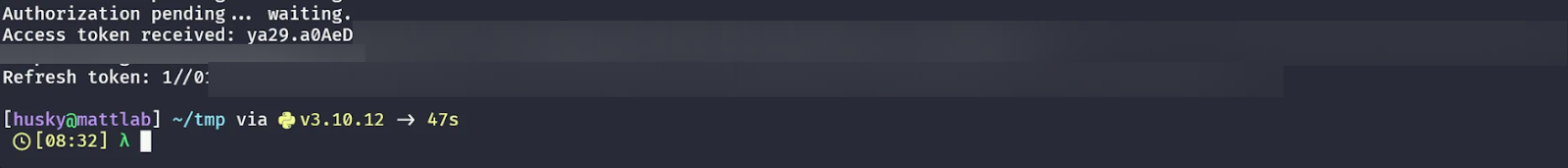

Once the victim punches in their credentials, we get an access token and refresh token! But good luck using either to gain initial access given the limited scope.

Important note: I’ve really only scratched the surface of the attack surface with Google compared to Azure. There may be undocumented client IDs, undocumented scopes available for exploitation, and many other possible attack vectors here that are unknown to me and/or the community. But I think it’s already apparent that the attack vectors in Google Cloud are significantly more limited when compared to their Azure equivalents.

Quite a difference between the two identity providers!

If I could summarize the key takeaway here, it’s this: Google understands that the device code flow is niche and only used in certain scenarios and limits the scopes available to the device code flow accordingly. Microsoft imposes no such limitations.

You can imagine why Google chose to implement it this way. The two sets of permissions have to do with GDrive and YouTube. Google understands that the types of client devices that would probably use the device code authentication flow are smart TVs (the documentation even specifies this in the title! “OAuth 2.0 for TV and Limited-Input Device Applications”). It’s not hard to imagine that the kinds of access a TV would need center on entertainment – “Hey smart TV, please play my favorite YouTube video!”

But take a moment to appreciate the fact that two implementations of the same OAuth feature have radically different attack surfaces associated with each. Azure’s lack of restriction on the requested scopes and resources has already led to researchers identifying extremely powerful attack primitives for device code phishing attacks. But Google’s implementation puts severe restrictions on the same kind of attack.

While it’s unclear if other identity attacks (consent grant attacks, rogue device join, etc.) are as effective in Google as they are in Azure, we can safely conclude that device code phishing is far less effective in Google Cloud than in Azure.

Same feature. Same design. Different implementations. Radically different attack surfaces! It goes to show you: while all OAuth 2.0 implementations are equal, it turns out that some are just a bit more equal than others.
